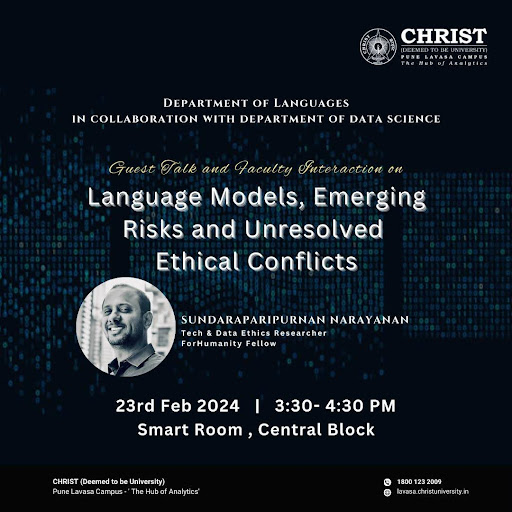

Guest Talk and Faculty Interaction: Language Models, Emerging Risks and Unresolved Ethical Conflicts

ABOUT THE EVENT

The Department of Languages, School of Arts and Humanities, CHRIST (Deemed to be University), Pune Lavasa Campus, in collaboration with the Department of Data Science, organized a Guest Talk and Faculty Interaction on “Language Models, Emerging Risks and Unresolved Ethical Conflicts” by Mr Sundaraparipurnan Narayanan on 23rd February 2024. A ForHumanity fellow, Mr Narayanan is a data ethics researcher, podcast host and article writer. He is also a member of the Advisory Board of the Department of Languages, and this visit marked the beginning of a promising academic and research collaboration.

In the first part of his talk, Mr Narayanan explained some of the problems caused by language models. When used extensively for translation, language models can introduce bias. Open-source models like No Language Left Behind (NLLB) can translate across 200 languages. However, since the error rate he cited for machine translation (18%) is much higher than that for human translation (4%), the output may be riddled with mistranslation, misinterpretation, misinformation and information pollution. To illustrate how such errors accumulate, he gave the example of a monolingual person who reads newspaper reports in translation, day after day.

In the second part of his talk, Mr Narayanan turned to the limitations inherent in today’s language models. Having been built on the principle of word embedding, these models capture the relationships between words, but they do not understand the relationships between humans, which are crucial to a proper understanding of the way languages work.

Thus, the speaker explained, they are unable to comprehend expressions whose meaning depends on relationships that are not stated explicitly (for example, “spouse’s spouse”). In his account, they take into account only the position of words, not their sequence. As a result, they fail to comprehend sarcasm and cannot handle ambiguity, in contrast to human beings, who have evolved from understanding sounds to understanding words and can thus grasp sarcasm well. Language models are built on probabilities rather than facts. They are vulnerable to manipulation and can yield under pressure. Since they lack common sense and domain knowledge, they must be deployed with caution, and they can be used well only when their limitations are well understood.

Finally, the speaker lamented the bleak future facing regional languages, owing to the Eurocentric design of basic computer systems as well as of advanced language models, which has made English the preferred language for expression and content creation. He expressed dismay that, owing to generative AI and our overdependence on such tools, human beings may eventually lose their neural connection with language. Speculating on the job market, he stated that the next three to five years would likely be characterized by a focus on developing such AI tools further, and that the next five to eight years may be spent on specializing in the use of these tools. In ten years, however, he predicted a renewed appreciation of human beings who can employ their minds over and above leveraging technology.

The talk ended with a Q and A session, followed by department-level interactions to identify potential areas of collaboration.

OUTCOMES OF THE EVENT

Outcome 1: Understand language models, risks and conflicts

Outcome 2: Appreciate emerging ethical questions in the age of AI

Outcome 3: Explore opportunities for research and collaboration
